OpenMind launches OM1 Beta open-source, robot-agnostic operating system

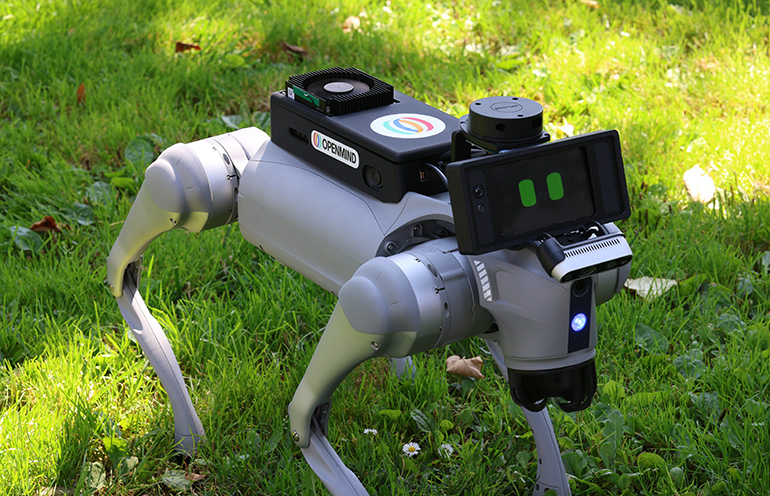

OpenMind says OM1 Beta enables robots to perceive, reason, and act without proprietary limitations. Source: OpenMind

Robotics has long been fragmented, with developers forced to choose between closed ecosystems, hardware-specific software, or steep learning curves, according to OpenMind AGI. The company today announced the beta release of OM1, which it claimed is “the world’s first open-source operating system for intelligent robots, a universal platform that allows any robot to perceive, reason, and act in the real world.”

OpenMind asserted that its OM1 platform enables robots of all forms to perceive, adapt, and act in human environments. The San Francisco-based company said its FABRIC decentralized coordination layer provides secure machine identities and powers a global network through which smart systems can collaborate.

Together, OM1 and FABRIC enable machines to operate across any environment while maintaining security and coordination at scale, said OpenMind. It added that the release provides "a shared foundation that accelerates development, fosters interoperability, and unlocks a new era of machine intelligence."

“OM1 gives developers a shortcut to the future,” said Boyuan Chen, chief technology officer of OpenMind. “Instead of stitching together tools and drivers, you can immediately start building intelligent behaviors and applications.”

OpenMind supports robot autonomy

OpenMind listed the following features in its beta release:

Hardware-agnostic: The operating system works across quadrupeds, humanoids, wheeled robots, and drones.

AI model integrations: OM1 Beta offers plug-and-play support for OpenAI, Gemini, DeepSeek, and xAI.

Voice + Vision: OpenMind said its platform enables natural communication through speech-to-text (Google ASR), text-to-speech (Riva, ElevenLabs), and vision/emotion analytics.

Preconfigured agents: Ready-to-use agents are included for popular platforms such as Unitree's G1 humanoid and Go2 quadruped, TurtleBot, and the Ubtech mini humanoid.

Autonomous navigation: The OS supports real-time simultaneous localization and mapping (SLAM) and lidar, and it uses Nav2, the ROS 2 navigation stack, for path planning.

Simulation support: Gazebo integration lets developers test behaviors in simulation before deploying them on hardware.

Cross-platform: OpenMind's software runs on AMD64 and ARM64 architectures and is distributed via Docker for fast setup.

OM1 Avatar: This React-based front-end application provides the user interface and avatar display system for OM1.

The beta release allows developers to prototype voice-controlled quadrupeds in minutes or test collaborative navigation with real-time mapping and obstacle avoidance. Developers can also use OM1 Beta to deploy humanoid robots that integrate language models for natural interaction and simulate behaviors in Gazebo before deploying them to the real world, said OpenMind.

OM1 Beta release is available now

“Robotics has reached a tipping point. Billions of dollars have been invested in hardware, but without a universal intelligence layer, robots remain difficult to program, siloed by manufacturer, and underutilized,” said OpenMind.

OM1 Beta is now available on GitHub, with setup guides and community support in its documentation hub.

OpenMind said that releasing OM1 as open source lowers barriers to entry, avoids proprietary lock-in, fosters developer collaboration, and enables interoperability across robot types and manufacturers, with the goal of making robotics adoption as fast as software development.

“Robots shouldn’t just move — they should learn, adapt, and collaborate,” said Jan Liphardt, CEO of OpenMind. “With this release, we’re giving developers the foundation to make that a reality. Just as Android transformed smartphones, we believe an open OS will transform robotics.”

OpenMind has worked with DIMO Ltd. to enable machines such as autonomous vehicles to communicate with one another, as a step toward smart cities. Last month, the company raised $20 million.

Editor’s note: RoboBusiness 2025, which will be on Oct. 15 and 16 in Santa Clara, Calif., will feature tracks on physical AI, enabling technologies, humanoids, field robotics, design and development, and business. Registration is now open.