How robots learn to handle the heat with synthetic data

A thermal camera can capture data to help train robots for a wide range of scenarios. Source: Bifrost AI

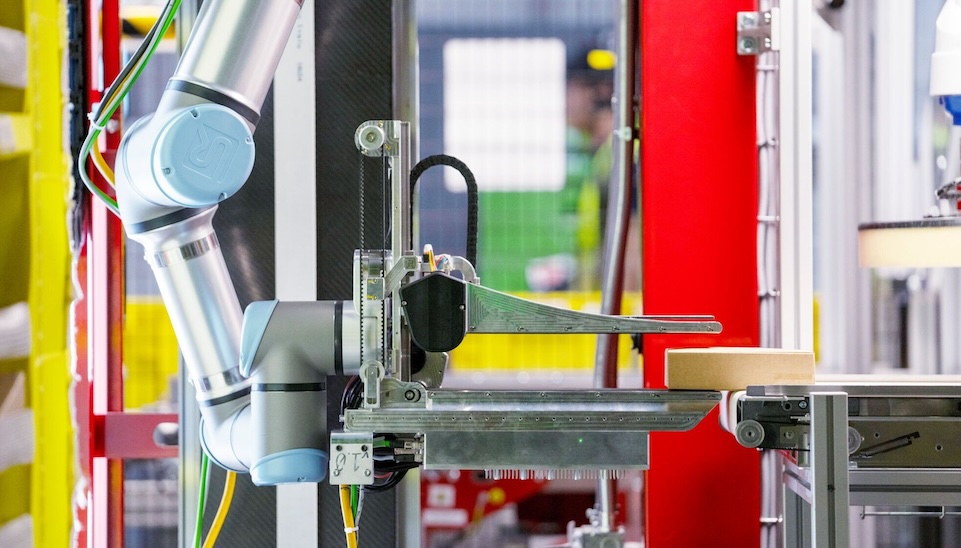

Robotics teams have typically needed huge amounts of data to train and evaluate their systems. As demand has grown, the systems have become more complex, and the quality bar for real-world and synthetic data has only gone up.

The problem is that most real-world data is repetitive. Fleets capture the same empty streets, the same calm oceans, the same uneventful patrols. The useful moments are rare, and teams spend months digging for them.

The challenge isn’t just collecting edge cases. It’s also getting full coverage across seasons, lighting, weather, and now across different sensors—including thermal, which becomes essential when visibility drops.

No team can wait a year for the right season or create thousands of real collisions just to gather data. Even the largest fleets can’t capture every scenario they need. Reality just doesn’t produce enough variety fast enough.

So teams are turning to synthetic data. They can generate the exact scenarios they need on demand, from ice-covered roads to rare hazards that appear once a year. They can also create thermal versions of these scenes, giving robots the examples they need to learn to see when light disappears.

Synthetic data gives robotics teams the coverage reality won’t deliver, at the speed modern autonomy requires.

Synthetic data exposes robots to real-world scenarios

Training autonomous systems on synthetic data (computer-generated scenarios that replicate real-world conditions) gives robots a way to learn about the world before they ever encounter it. Just as a child can learn to recognize dinosaurs from watching Jurassic Park, computer vision models can learn to identify new objects, environments, and behaviors by training on simulated examples.

Synthetic datasets can provide rich, varied, and highly controlled scenes that help robots build an understanding of how the world looks and behaves across the full range of situations they might face.

Seeing beyond color

Robots, like humans, use more than standard cameras to understand the world. They rely on lidar, radar, and sonar to sense depth or detect objects. When visibility drops at night or in fog, they switch to infrared.

The most common infrared sensor is the thermal camera. It turns heat into images, letting robots see people, vehicles, engines, and animals even in total darkness.
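As a rough illustration of what "turning heat into images" means, the sketch below maps a small grid of temperatures to 8-bit grayscale pixel values. It is a simplification under stated assumptions: real thermal cameras measure infrared radiance and apply sensor-specific calibration, and the function name, the temperature window, and the sample scene here are all hypothetical.

```python
def temperatures_to_grayscale(temps_c, t_min, t_max):
    """Linearly map temperatures (deg C) to 0-255 pixel values.

    Temperatures outside [t_min, t_max] are clamped, so anything hotter
    than t_max renders as pure white and anything colder as pure black.
    This is an illustrative mapping, not a model of any real sensor.
    """
    span = t_max - t_min
    if span <= 0:
        raise ValueError("t_max must be greater than t_min")
    image = []
    for row in temps_c:
        pixels = []
        for t in row:
            clamped = min(max(t, t_min), t_max)
            pixels.append(round(255 * (clamped - t_min) / span))
        image.append(pixels)
    return image


# Hypothetical 3x3 scene: cool background, a person near body
# temperature in the center, and a hot engine in one corner.
scene = [
    [10.0, 10.0, 12.0],
    [10.0, 36.5, 12.0],
    [10.0, 10.0, 60.0],
]
image = temperatures_to_grayscale(scene, t_min=10.0, t_max=40.0)
```

With this window, the warm person and the hot engine stand out as bright pixels against a dark background, regardless of how much visible light is in the scene.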

To train these systems well, teams need synthetic thermal data that captures the full range of heat patterns robots will face in the field.

Synthetic thermal data shines in high-risk applications

Synthetic thermal data matters most in places where collecting real-world thermal footage is too dangerous or too rare. Defense and industrial systems operate in messy, unpredictable environments, and they need coverage that reality can’t reliably provide.

Autonomous vessels at sea: Fog, spray, and darkness are normal at sea. Thermal makes people, boats, and coastlines stand out when RGB cameras go blind.

Drones at night: Gathering thermal data for emergency night flights or collision avoidance in cluttered terrain is risky and expensive. Synthetic thermal lets drones learn to navigate in zero light, through smoke, fog, and dense vegetation where traditional cameras fail.

Satellites tracking heat signatures: Atmospheric noise and sensor limits mean satellites can’t capture every thermal scenario on Earth. Synthetic thermal fills the gaps for weather forecasting, climate monitoring, and disaster response, strengthening the models these satellites rely on.

Synthetic thermal data lets teams build robots 100x faster

Teams are already generating synthetic datasets for rare or hard-to-capture scenarios on demand instead of waiting months for field data. This shift has pushed iteration speeds up to 100x in some cases and cut data acquisition costs by as much as 70% when paired with real-world datasets.

Adding synthetic thermal data can make these gains even bigger. By working with the world’s best simulation partners, we’ve been able to build a high-quality thermal pipeline that delivers these speed and cost advantages straight to the teams building the next generation of physical AI.

Which is the future—synthetic or real data?

Teams need both real and synthetic data, as we’ve seen from working with some of the most advanced robotics groups in the world, from NASA’s lunar rover teams to Anduril’s field autonomy teams. They collect huge amounts of real-world data, but much of it is repetitive.

The issue isn’t quantity; it’s coverage. The goal is to find the gaps and biases in those real datasets and fill them with targeted synthetic data.

This hybrid approach offers teams a stronger, more complete data strategy. By combining the nuance of real missions with the precision and scale of synthetic generation, robotics teams can build systems ready for the hardest conditions and the low-probability scenarios every robot will eventually face.
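The gap-finding step described above can be sketched in a few lines. This is a minimal example, assuming each real sample carries simple metadata tags. The axis names, condition values, sample counts, and the `min_count` threshold are hypothetical and not drawn from any specific team's pipeline.

```python
from collections import Counter
from itertools import product


def find_coverage_gaps(samples, axes, min_count):
    """Return {condition combination: shortfall} for under-covered cases.

    samples   -- list of dicts, one per real sample, keyed by axis name
    axes      -- dict mapping axis name to the list of values to cover
    min_count -- minimum number of samples wanted per combination
    """
    counts = Counter(tuple(s[name] for name in axes) for s in samples)
    gaps = {}
    for combo in product(*axes.values()):
        shortfall = min_count - counts[combo]
        if shortfall > 0:
            gaps[combo] = shortfall
    return gaps


# Hypothetical coverage axes and a deliberately repetitive real dataset.
AXES = {
    "sensor": ["rgb", "thermal"],
    "lighting": ["day", "night"],
    "weather": ["clear", "fog"],
}
real_samples = (
    [{"sensor": "rgb", "lighting": "day", "weather": "clear"}] * 500
    + [{"sensor": "rgb", "lighting": "night", "weather": "clear"}] * 40
    + [{"sensor": "thermal", "lighting": "night", "weather": "fog"}] * 3
)

gaps = find_coverage_gaps(real_samples, AXES, min_count=50)
for combo, shortfall in gaps.items():
    print(combo, "needs", shortfall, "more samples")
```

Here the dataset is large but lopsided: one combination has ten times the samples it needs, while most others have few or none. The shortfall per combination is one possible way to decide how many synthetic scenes to request for each condition.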

About the author


Charles Wong is the co-founder and CEO of Bifrost AI, a synthetic data platform for physical AI and robotics teams. Bifrost generates high-fidelity 3D simulation datasets that help customers train, test, and validate autonomous systems in complex real-world conditions.

Wong and his team work with organizations such as NASA Jet Propulsion Laboratory and the U.S. Air Force to create rich virtual environments for planetary landing, maritime domain awareness, and off-road autonomy.
